Context Rot: Why AI Gets Worse the Longer You Chat (And How to Fix It)

Have you ever noticed that AI gets worse the longer you talk to it?

I first noticed this when I was trying to fix bugs on Replit. I'd spin in an endless cycle of asking the agent to fix something and it would report back that it fixed it, even though it hadn't. I learned through trial and error that starting a new conversation often fixed the problem.

This isn't unique to Replit. I see it everywhere: ChatGPT, Claude Code, the Lovable agent.

Why is this? Recent research is shining a light onto why this happens. It's called "context rot." The more input we give a large language model, the worse it tends to perform. It might ignore some input or over-index on other input. How performance degrades is complex, but we are starting to get a better understanding of the common patterns.

With large language models, the quality of our inputs affect the quality of the output. There are a couple of ways we can affect the quality of the input:

- We can clearly specify what we want. (Often referred to as "prompt engineering.")

- We can provide the needed context so that the LLM can do what we want. (Often referred to as "context engineering.")

But we are learning that it isn't this simple. We also have to account for context rot. So we need to add a third lever to our list:

- We can manage the usage of the context window.

Today, I'm going to:

- explain what the context window is

- summarize the research on context rot so you understand how to optimize LLM performance

- show how you can learn about the context window and see what's in it

- provide actionable tips on how you can manage the context window when using LLMs in your day-to-day work.

If you are starting to use a command-line interface like Claude Code or Codex or you are using Claude Cowork to create your own AI workflows, this article will help you understand why LLM performance degrades over the course of the conversation and what you can do to prevent it.

If you are an audio/visual learner, start with this overview video and then dive into the rest of the article.

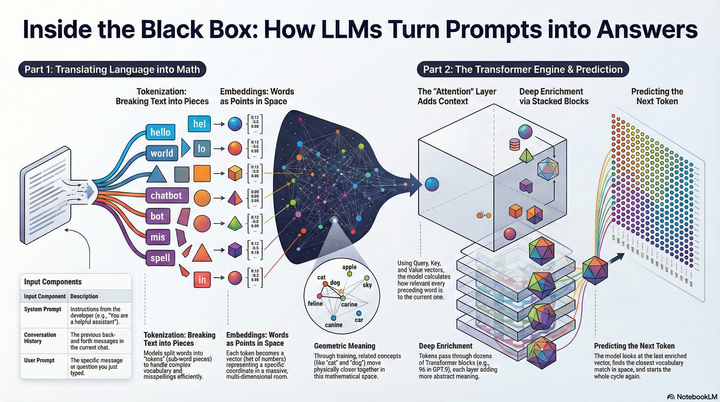

What is a context window?

The context window is the metaphor we use for what captures the input into a large language model. You can think about it as the model's short-term memory. It's everything that the model can work with at one time.

A context window has a fixed size. It's not infinite. The amount of input you can enter at once is constrained by the size of the context window. And the size varies from model to model.

| Model | Context Window |

|---|---|

| Claude Opus 4.5 | 200K tokens |

| Claude Sonnet 4.5 | 200K tokens (1M at usage tier 4) |

| GPT-5.2 | 400K tokens |

| Gemini 2.5 Pro | 1M tokens |

If you read my "How Does ChatGPT Work?" article, you learned that the input into the model includes more than just what you type in. Each application adds a system prompt that gets prepended to your user message. Depending on the application context, the system prompt can include tool descriptions (e.g. like from an MCP server), available skills, plugins, and much more.

In a back-and-forth conversation, the entire conversation history is often included in the context window on every turn. This is the only way the model can follow the conversation.

Even though many models have large context windows, in practice, the context window can fill up quickly. And we'll see that filling the context window up can cause problems.

There are many factors that constrain the context window size including the amount of compute required to process the input, the amount of memory required to remember values during processing, and the size of input used during training.

What is context rot?

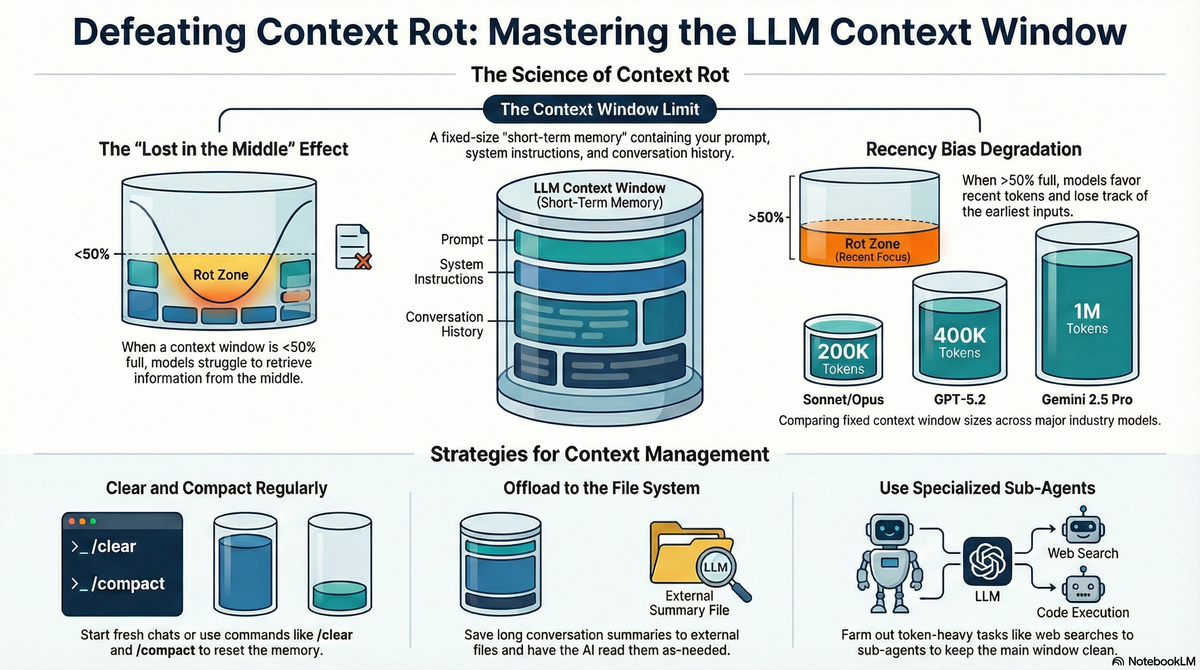

How context degrades varies based on how full the context window is.

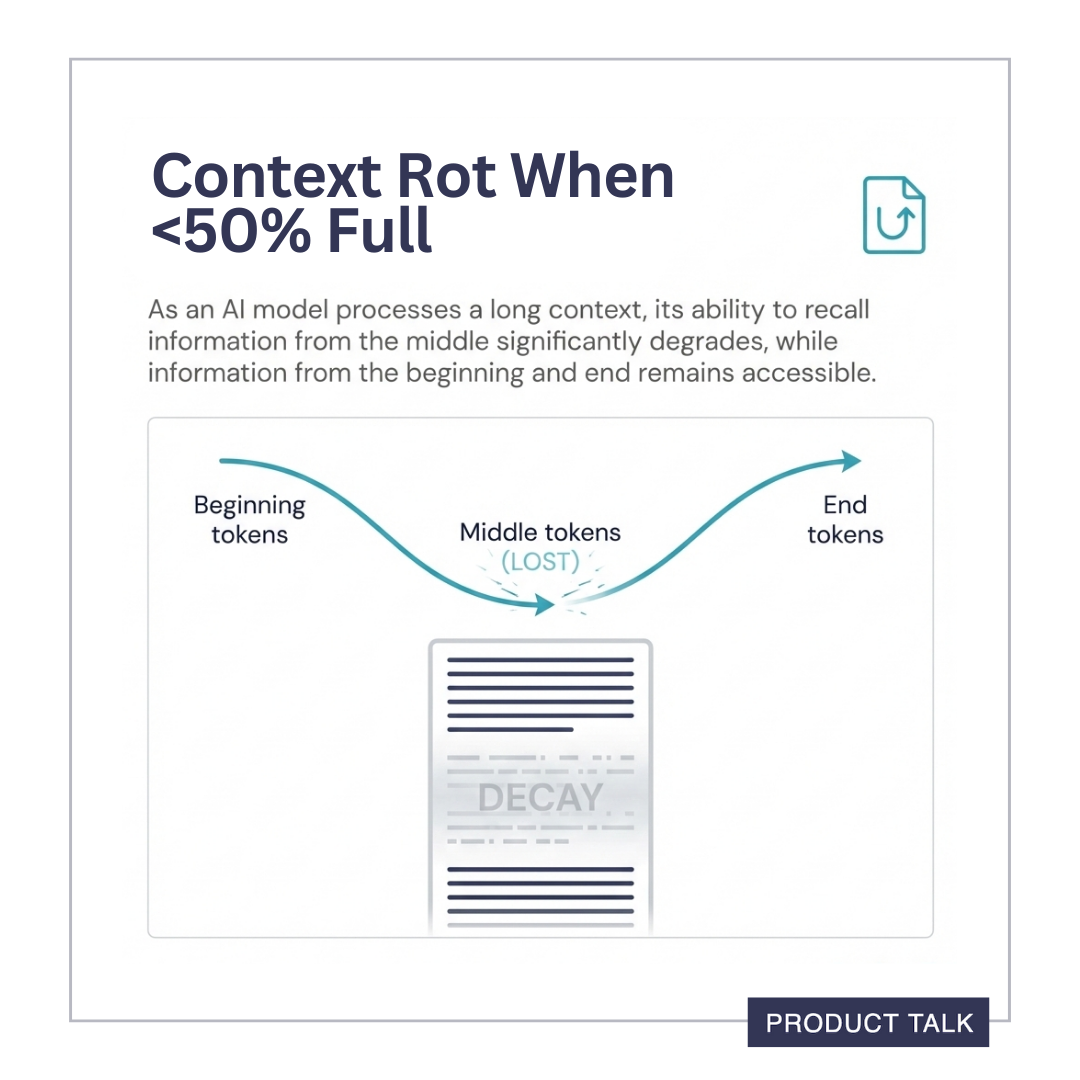

In November 2023, Liu et al released a paper called "Lost in the Middle" that introduced the first evidence of context rot. The authors found that as the context window filled up, models started to favor tokens at the start of the input and tokens at the end of the input. Tokens in the middle "got lost." Hence the name "Lost in the Middle."

In 2025, several more researchers published papers exploring this pattern and the term "context rot" was coined. Here's a quick synopsis of what studies to date have found:

- The initial Liu et al (2023) research used simple "needle-in-a-haystack" tasks to test context degradation. In a "needle-in-a-haystack" task, the model is asked if a sentence ("the needle") is in the context ("the haystack"). The study found that as the context window filled up, models performed worse on these simple tasks.

- Paulsen (2025) showed that context degraded over a wide variety of task types (not just "needle-in-a-haystack" tasks) and often with far fewer tokens for more complex tasks.

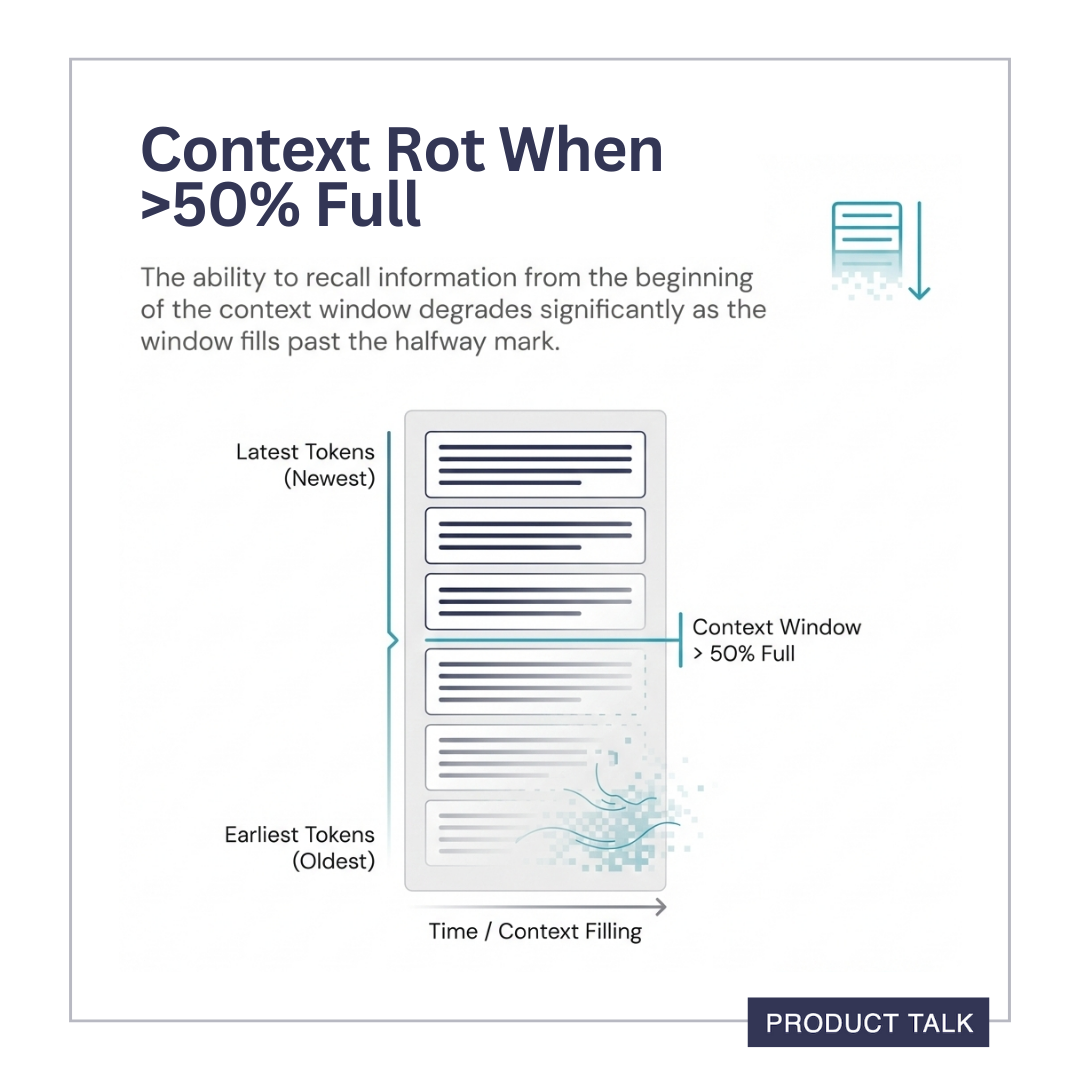

- Veseli et al (2025) found that the U-shaped pattern (where the LLM favors tokens at the beginning and the end) that Liu et al (2023) found only persists when the context is less than 50% full. When the context is greater than 50% full, Veseli et al (2025) found a different pattern: Context degrades by distance from the end, where the LLM favors more recent tokens, then middle tokens, over early tokens.

- Researchers theorized that the models were struggling with retrieval, meaning the model couldn't find the needle in the haystack. But Du et al (2025)—through some clever experiments—showed that it's not a retrieval issue. It's simply a function of the input length. In one of their more fascinating experiments, they replaced all of the non-"needle" tokens in the input with blank spaces. Their thinking was if this was a retrieval problem, the needle should now be obvious—but they still saw the same evidence of context degradation in these modified tasks.

So what does all of this mean?

Even with a large context window size, we don't want to fill it up. Performance degrades as it fills up. And it degrades in different ways.

- When the context window is less than 50% full, the model will lose tokens in the middle.

- When the context window is greater than 50% full, the model will lose the earliest tokens.

This research helps me understand why Claude sometimes ignores my CLAUDE.md rules. And I now have a clear way to fix it. More on that in a bit.

It's Hard to Manage Context Using LLMs in the Web Browser

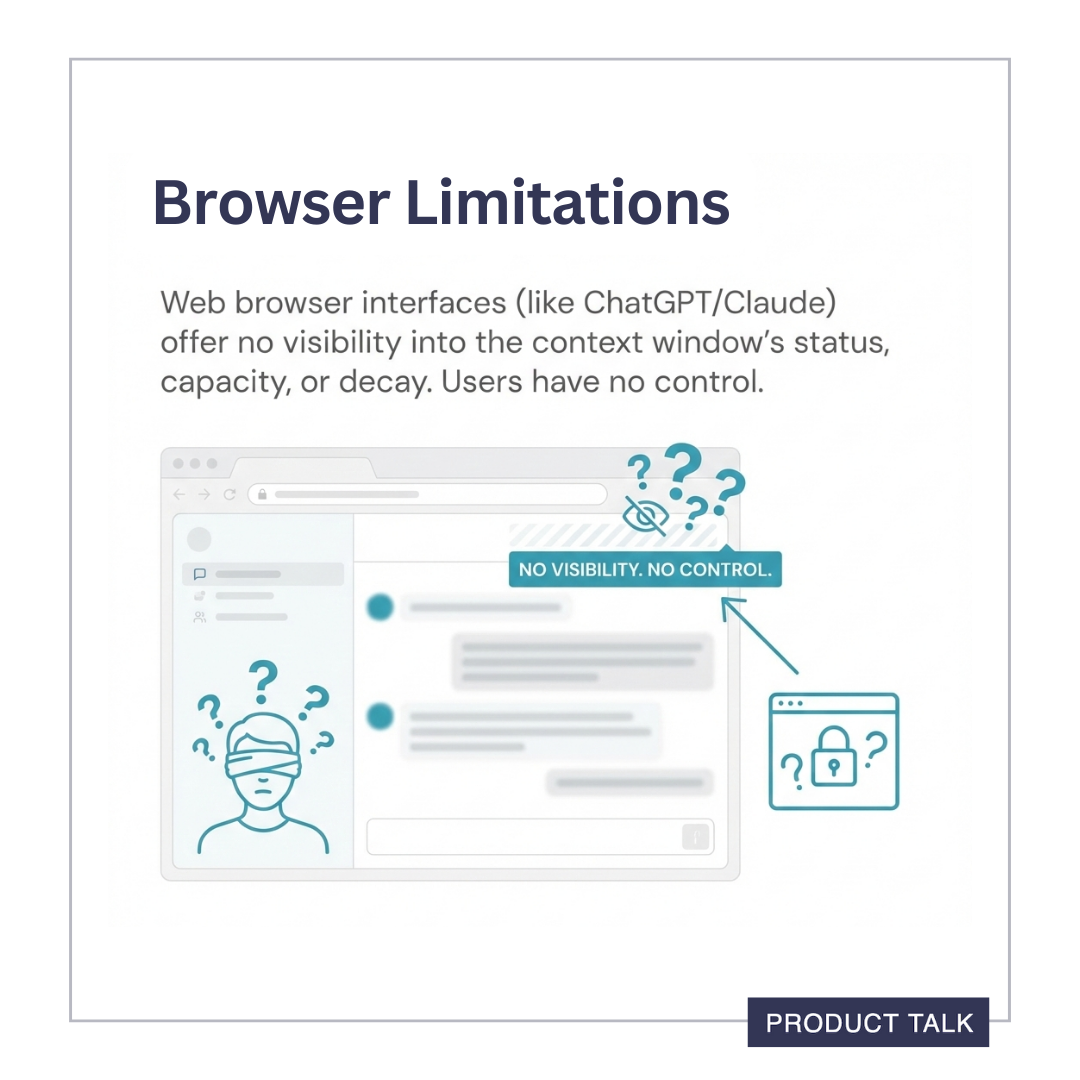

First, we have to acknowledge a major limitation of using ChatGPT, Claude, or Gemini in the web browser. These applications give us no visibility into how full the context window is.

You might have used ChatGPT since the day it came out and not even known what a context window is. That's a problem.

In fact, these tools make it seem like the context window is infinite, as they allow you to chat forever. But now you know they aren't. Context degrades over time. The longer your chat gets, the worse the model will perform.

It also means you have little control over what goes in the context window (beyond what you type), how and when to condense it or clear it, or even know when you might need to do so. That means you can't influence how the model performs. Your only tool for managing context rot in a web browser is starting a fresh chat.

Starting a new chat clears the context window. And you should do this often. If you are working with an LLM in your web browser, start a fresh chat whenever:

- You start a new topic.

- The model does something problematic and you want it to try again.

- The conversation starts to get long (e.g. more than 15 messages). Ask the model to summarize the conversation and use the summary to start a fresh chat.

These tips will help you keep the context window small.

But we can do so much more when we can see what's in the context window, how full it is, and know exactly when to mitigate it. This is one of the things I love about Claude Code.

Claude Code Gives Full Visibility Into the Context Window

Learning about the context window and how to manage it is one of those skills that we are all going to have to develop as we learn to build AI products. And using Claude Code day in and day out is teaching me how to do exactly that.

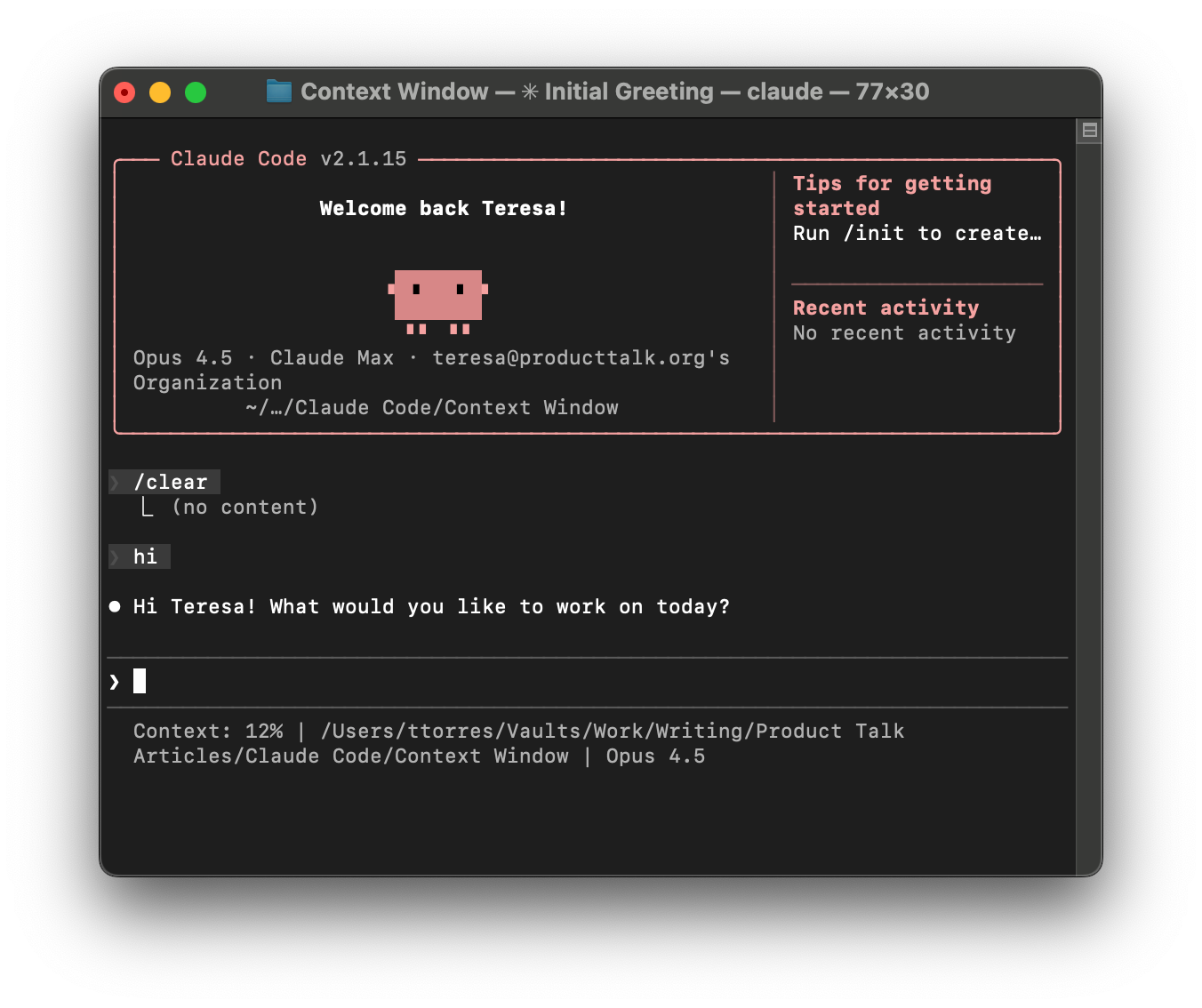

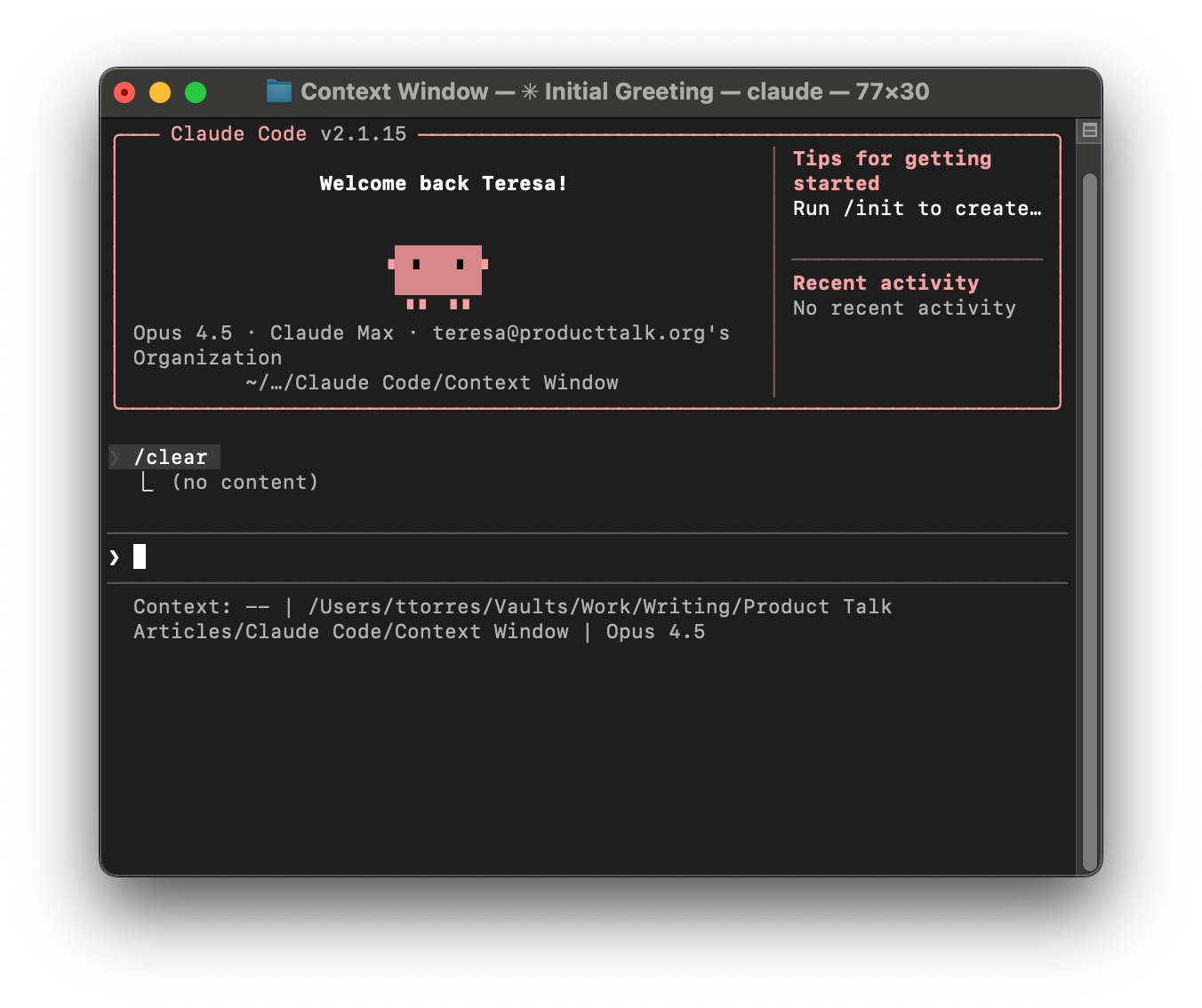

Here's why: Claude Code tells me at all times how full the context window is. I see a percentage right below my prompt line.

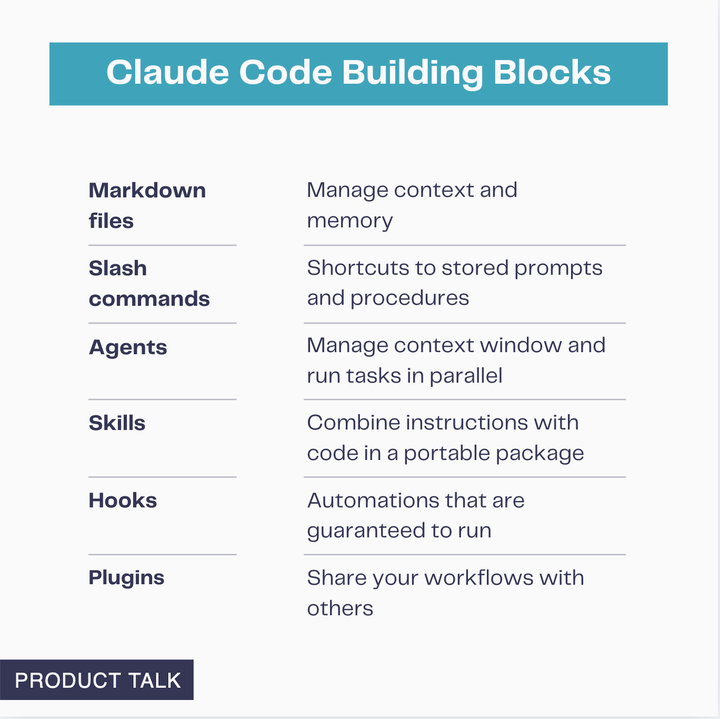

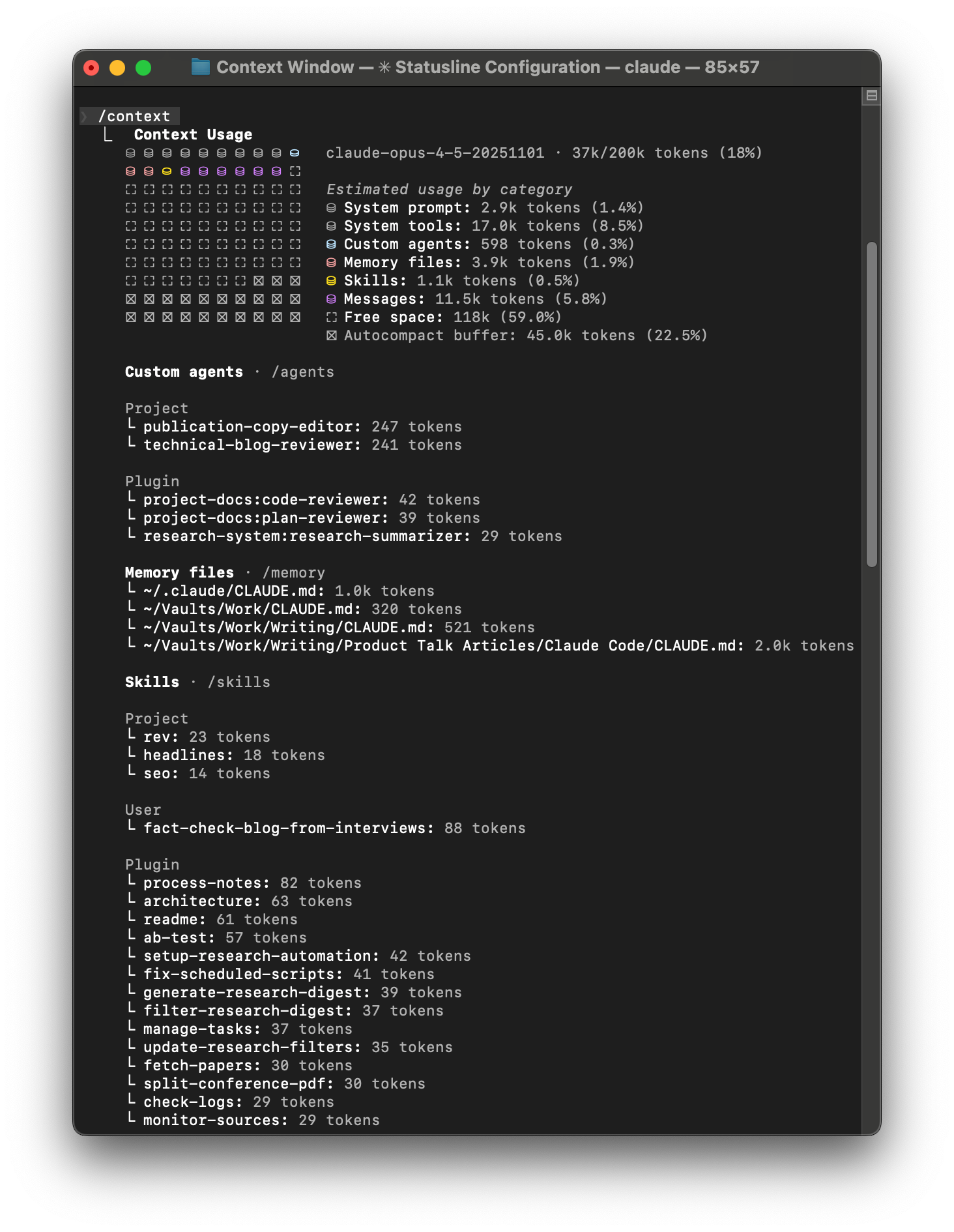

Similarly, I can use the /context command to examine what's in the context window. And I can use the /clear command to clear the context. I can also use the /compact command to tell Claude how to compact the conversation.

These tools are all built in to Claude Code by default. Claude will also automatically compact the conversation when the context window starts to get full. But I like having access to the tools myself.

Being able to see how full the context window is and being able to see how the model performs at different percentages helps me hone my judgment on where I want the context window to be for different tasks.

For example, I don't worry too much about how full the context window is when I ask the agent to create a new task in my task management system. That task is simple. Claude is simply creating a task file based on the contents I give it. But during planning (a much more complex task), I have the agent update a plan file so that I can continuously /clear the context and get the best performance from the model throughout the planning session.

By the way, if you haven't tried Claude Code and want to, check out my series:

- Claude Code: What It Is, How It's Different, and Why Non-Technical People Should Use It

- Stop Repeating Yourself: Give Claude Code a Memory

- How to Use Claude Code Safely: A Non-Technical Guide to Managing Risk

- How to Choose Which Tasks to Automate with AI (+50 Real Examples)

- How to Build AI Workflows with Claude Code (Even If You're Not Technical)

- How to Use Claude Code: A Guide to Slash Commands, Agents, Skills, and Plugins

I have not received any compensation from Anthropic for writing this series. And you can trust that if that ever changes, I will disclose it. This is not only required by the FTC here in the US, but I strongly believe it is the right thing to do. You can count on me to do so.

With this foundation, let's now look at how you can effectively manage the context window in Claude Code. We'll cover how you can:

- Monitor the context window percentage

- Examine the contents of the context window

- Keep your CLAUDE.md small, but effective

- Use the file system to offload conversation context

- Use /compact and /clear effectively

- Add agents, skills, and plugins without filling the context window

- Expand the context window by offloading token-intensive tasks to sub-agents

- Create token-efficient MCP servers

- Manage "lost in the middle" and recency bias token loss in long conversations

Product Talk is a reader-supported publication. These rest of this article is for paid subscribers. If you haven't already, subscribe to get access.