Customer Interview Analysis: Where AI Helps and Hurts

It's easy to get overwhelmed by continuous customer interviews.

When you conduct a customer interview every week, the data starts to pile up. You collect hours of transcripts. If you aren't disciplined about synthesizing each interview as you go, you can quickly fall behind.

I have heard countless teams say, "We need to stop interviewing so we can catch up on what we've already learned." This is always a red flag. Many teams stop and never start again.

But I get it. Interview synthesis is cognitively challenging. It takes time. And that's time that many teams simply don't have.

That's why I'm not surprised to see so many teams turn to generative AI for help with interview synthesis. But this worries me.

Before we get into why, we need to revisit our goal with continuous interviewing, how story-based interviews help us reach that goal, and what good synthesis looks like. Only then can we look at where AI synthesis helps vs. where it hurts.

The Goal of Continuous Interviewing: Develop a Rich & Deep Understanding of the Opportunity Space

In continuous discovery, we interview customers every week so that we can develop a deep and rich understanding of who our customers are, what their goals are, the context in which they strive for those goals, and what opportunities (needs, pain points, and desires) arise as they work towards those goals.

We learn about our customers by asking for stories about their past behavior. Goals, context, and opportunities emerge from those stories.

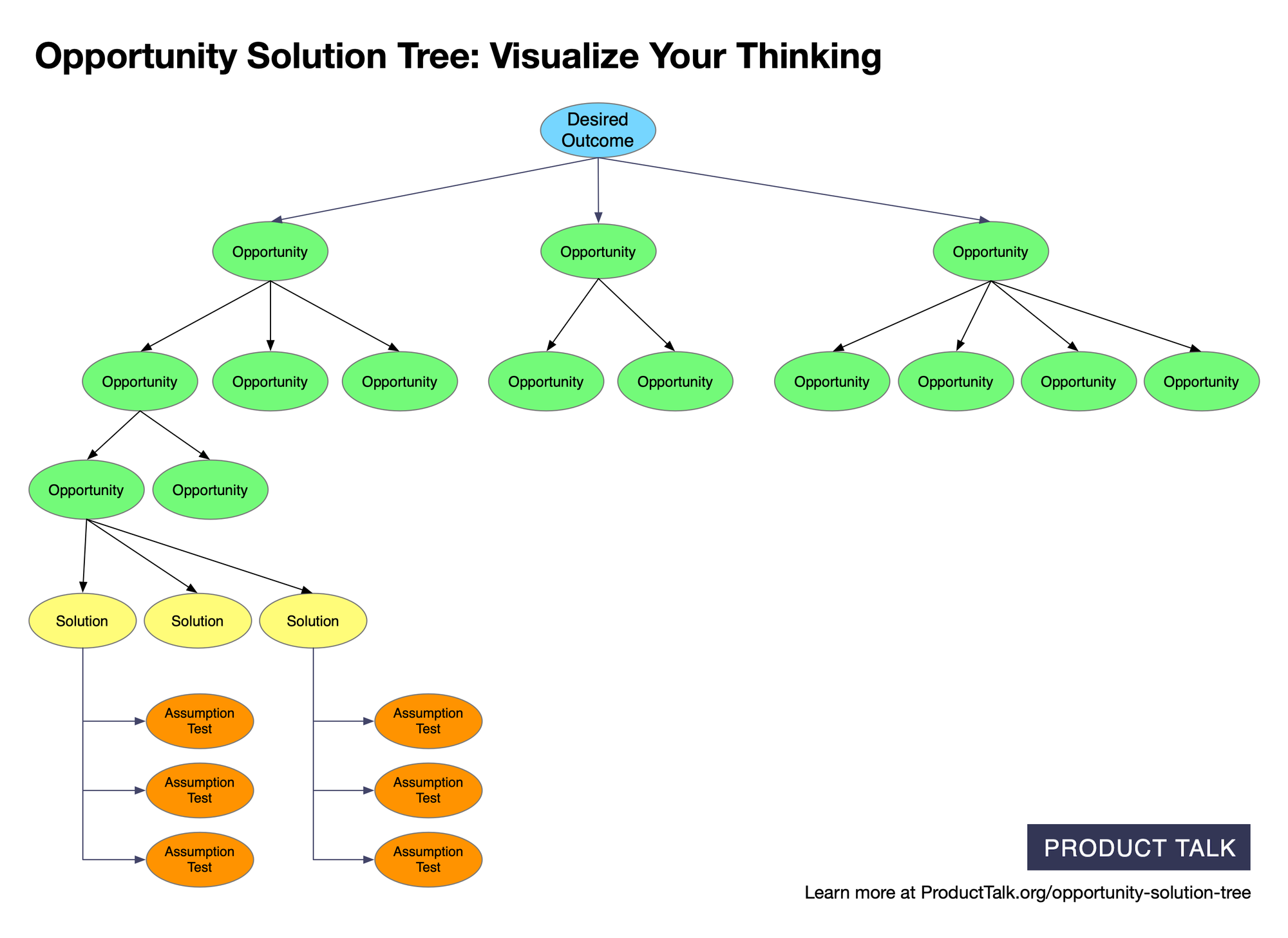

We synthesize each story by capturing what we learn on an interview snapshot. As we collect multiple stories, we synthesize what we are learning across those stories by mapping the opportunity space on an opportunity solution tree.

This is what good looks like. Your habits might vary, but conceptually, this is what we should all be striving for.

The Prerequisite Nobody Talks About: Deep and Rich Customer Stories

Many teams are not good at conducting customer interviews. They haven't invested in their interviewing skill.

Some teams misunderstand the purpose of an interview. They ask hypothetical questions about the future (e.g. "Would you use this?"). They spend their interview time getting feedback on their solutions instead of learning about their customers.

Some teams do ask about their customers' goals, context, and needs. But they ask direct questions out of context (e.g. "How do you decide what to watch?") or they ask speculative questions (e.g. "What do you typically watch?"). As a result, they get unreliable feedback from their customers.

The teams that do know to ask for specific stories about past behavior haven't honed their interviewing skills. They haven't practiced. So they collect shallow stories. They don't know what a deep and rich story looks like.

And here's the crux of the issue: If you aren't good at collecting a rich story about past behavior, no amount of AI synthesis can help you.

AI can't add missing context. It can't infer missing goals and motivations. It can't create actionable opportunities from shallow stories.

This is the same reason why humans struggle to synthesize their interviews. They haven't asked the right questions. They aren't sure what they learned. AI synthesis can't fix this.

This is exactly why I have spent so much time building Product Talk's Interview Coach. Good synthesis starts with a high-quality interview and the Interview Coach helps you improve your interviewing skills.


A Quick Look at What Strong Story-Based Synthesis Looks Like

Now let's assume you have collected deep and rich customer stories. What does high-quality synthesis look like?

Remember, synthesis happens in two steps:

- We need to synthesize what we learned from each interview.

- We need to synthesize what we are learning across our interviews.

Single-interview synthesis (step 1) is critical. It helps us understand that each customer is unique. It encourages us to dive deep into a single story and to really understand that customer's goals, context, needs, and motivations.

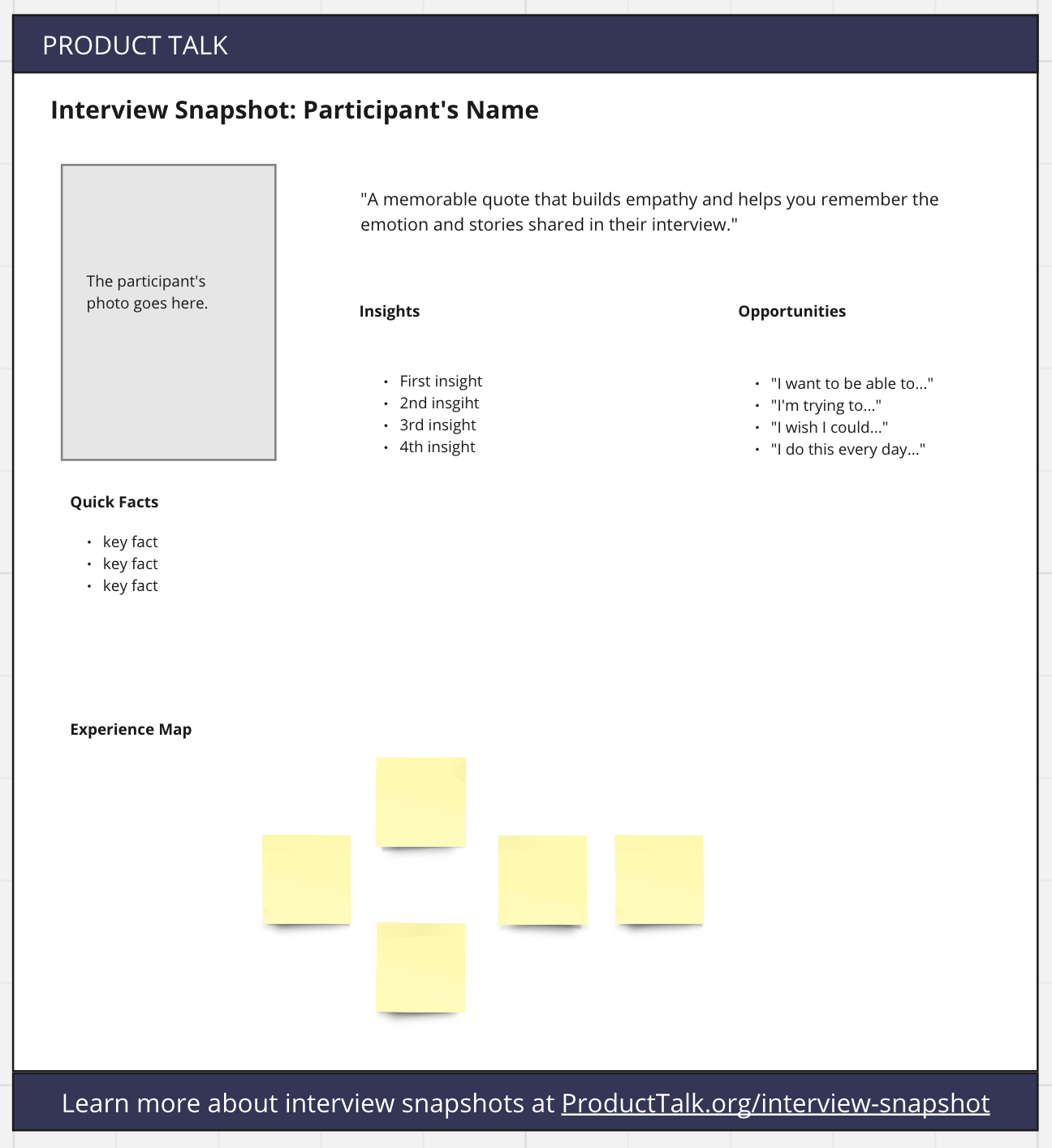

I like to use interview snapshots for single-interview synthesis. An interview snapshot helps to make our synthesis actionable. A snapshot includes several elements: quick facts that help us put the customer's story into context, a memorable quote that helps us remember the story, an experience map that represents the key moments in the story, and most importantly, a list of opportunities that we heard in the story.
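To make the elements of a snapshot concrete, here is a minimal Python sketch of one possible data structure. The class names, field names, and sample values are all assumptions made for illustration; they are not a prescribed template. The one design choice worth noting is that each opportunity must point at a key moment from the experience map, so it stays anchored to the story it came from.

```python
from dataclasses import dataclass, field


@dataclass
class Opportunity:
    """An unmet need, pain point, or desire heard in one story."""
    description: str
    moment: str  # the key moment in the story where it came up


@dataclass
class InterviewSnapshot:
    """One snapshot per interview. Field names are illustrative only."""
    participant: str
    quick_facts: list[str]
    memorable_quote: str
    experience_map: list[str]  # key moments, in story order
    opportunities: list[Opportunity] = field(default_factory=list)

    def add_opportunity(self, description: str, moment: str) -> None:
        # Reject opportunities that are not tied to a moment in this story.
        if moment not in self.experience_map:
            raise ValueError(f"Unknown moment: {moment!r}")
        self.opportunities.append(Opportunity(description, moment))


# Invented sample data, not taken from a real interview.
snapshot = InterviewSnapshot(
    participant="P1",
    quick_facts=["Streams most weeknights", "Shares an account with family"],
    memorable_quote="I spent longer choosing than watching.",
    experience_map=[
        "Sat down to watch",
        "Browsed recommendations",
        "Gave up and rewatched a favorite",
    ],
)
snapshot.add_opportunity(
    "I can't tell whether a show is worth my time",
    moment="Browsed recommendations",
)
```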

When we synthesize across our interviews (step 2), we are looking at multiple interview snapshots and asking what we are learning from these stories that can help us reach our desired outcome. It is this step that helps us connect what we are learning from our customer stories to the impact our business is asking us to have.

The key moments from the various stories help us give structure to the opportunity space. The specific unmet needs, pain points, and desires (i.e. opportunities) that emerge from those stories help us understand how our products and services can help our customers.

This is what good interview synthesis looks like. With this foundation, we can now better ask, "How can AI help with synthesis and where does it hurt?"

Where AI Synthesis Gets It Wrong

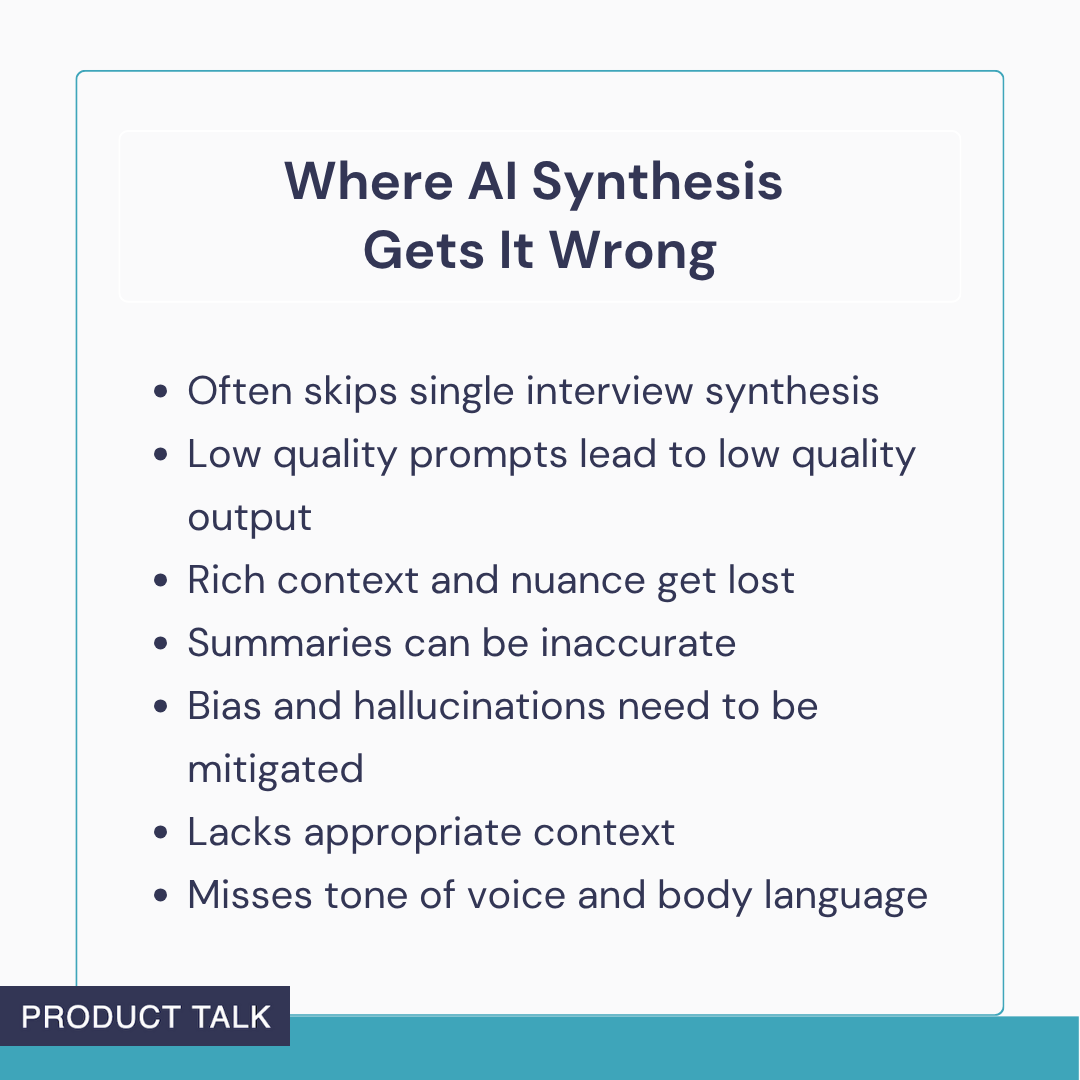

The biggest mistake I see teams make with synthesis (AI or human) is they combine the two synthesis steps into one. They synthesize across their interviews before they've synthesized each interview separately.

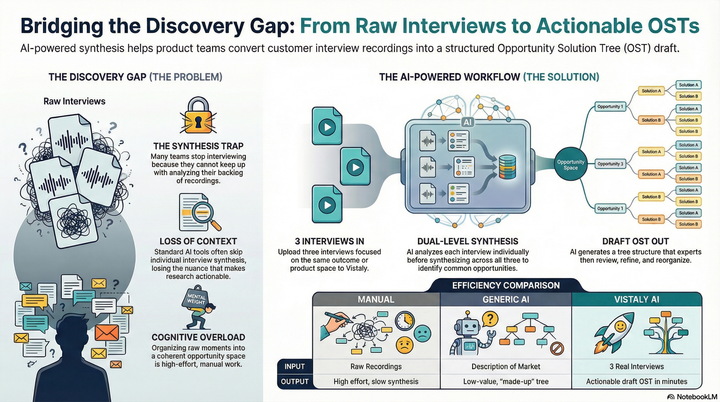

With AI synthesis, in particular, I see a lot of teams dump all their interview transcripts into an AI project or NotebookLM and ask the AI to tell them what they learned.

And this brings me to the second most common synthesis mistake, which is starting with a low-quality prompt. It's easy to ask AI to summarize what you learned from a set of interviews. Or to identify key themes or common pain points.

The challenge with these types of prompts is they lead to low-quality output that isn't likely to be actionable. We might get an easily digestible list of findings, but what should we do with those findings?

Suppose we learn what the three most common pain points are across our interviews. How would we address those pain points? You can't address a pain point well without knowing who experienced that pain point and the context in which it arose.

The interview snapshot is designed to turn a rich customer story into something a product team can act on. We capture opportunities in the context in which they occurred. We keep opportunities tied to specific customers. This allows us to verify if we fully addressed those opportunities after we release a solution.

If we simply ask for trends or themes or common pain points, we lose the rich context that allows us to develop appropriate solutions.
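One way to picture the difference is a cross-interview grouping that counts how often an opportunity came up while keeping every source attached. The Python sketch below is a hypothetical illustration: the `Observation` type, the `group_by_opportunity` helper, and the sample data are all invented for this example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    participant: str
    opportunity: str
    context: str  # what was happening when the opportunity arose


def group_by_opportunity(observations):
    """Group observations by opportunity, keeping each source attached."""
    grouped = defaultdict(list)
    for obs in observations:
        grouped[obs.opportunity].append(obs)
    return dict(grouped)


# Invented sample data.
observations = [
    Observation("P1", "I can't decide what to watch", "Weeknight, alone, 30 minutes free"),
    Observation("P2", "I can't decide what to watch", "Weekend, choosing with two kids"),
    Observation("P3", "I forget which episode I was on", "Switching from phone to TV"),
]

grouped = group_by_opportunity(observations)
for opportunity, sources in grouped.items():
    print(f"{opportunity} ({len(sources)} stories)")
    for obs in sources:
        print(f"  - {obs.participant}: {obs.context}")
```

A bare list of "common pain points" would keep only the first line of each group. The indented lines are the part that tells you who to design for and under what conditions.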

We can fix some of these issues by asking our AI tools to split synthesis into two steps: First synthesize each interview separately, then synthesize across our interviews. But many research tools don't work this way. So we have to do this manually ourselves.

Even when we take the time to do it manually, unless prompted well, AI can struggle with each synthesis step.

Summaries, in particular, are problematic. If you condense a 20–30 minute interview into a paragraph or two, you'll lose the context, nuance, and detail that make that customer story unique.

One study found that large language models "frequently generated summaries that oversimplified or omitted critical details." Another study found that models struggle to "adequately represent the deep meaning" when summarizing text.

Another concern with AI-generated summarization, theme analysis, and sentiment analysis is the introduction of bias in the LLM's output. The first study found that pre-training data "introduced biases that affected summarization outputs" and that models often "defaulted to generalizations or inaccuracies."

AI-generated synthesis can also introduce hallucinations—including made-up material—in its summaries and analysis, even going as far as including fabricated direct quotes. I experienced this when asking ChatGPT to help me summarize the Lovable interviews that I conducted. About 30% of the direct quotes that ChatGPT included were either incorrect summaries of what the participant said or weren't in the source material at all.
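A cheap first check for fabricated quotes is to confirm that every quoted passage in the AI output appears verbatim in the transcript. The Python sketch below is one rough way to do that, under stated assumptions: quotes are wrapped in straight or curly double quotation marks, and "verbatim" means a match after lowercasing and stripping punctuation. The function names and sample text are hypothetical. It will flag paraphrases as well as inventions, and it can't tell whether a real quote was used out of context, so it narrows the manual review rather than replacing it.

```python
import re


def normalize(text: str) -> str:
    """Lowercase, straighten apostrophes, and reduce punctuation to spaces."""
    text = text.lower().replace("\u2019", "'").replace("\u2018", "'")
    return re.sub(r"[^a-z0-9']+", " ", text).strip()


def find_unverified_quotes(summary: str, transcript: str) -> list[str]:
    """Return quoted passages in the summary that are not in the transcript."""
    # Match "straight" or curly double-quoted passages.
    pairs = re.findall(r'"([^"]+)"|\u201c([^\u201d]+)\u201d', summary)
    haystack = f" {normalize(transcript)} "
    unverified = []
    for straight, curly in pairs:
        quote = straight or curly
        # Pad with spaces so matches must start and end on word boundaries.
        if f" {normalize(quote)} " not in haystack:
            unverified.append(quote)
    return unverified


# Invented sample text.
transcript = "Honestly, I spent longer choosing than watching. Then I gave up."
summary = (
    'The participant said "I spent longer choosing than watching" '
    'and described the app as "completely useless".'
)
flagged = find_unverified_quotes(summary, transcript)
print(flagged)
```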

Synthesizing an interview well often requires having the right business, product, and customer context to correctly interpret the customer's story. Unless we provide this context directly, the AI doesn't have the context it needs to correctly synthesize an interview.

Finally, most AI synthesis only works on the interview transcript. This means tone of voice and body language are not taken into account. Both can significantly impact meaning.

Many of these limitations can be overcome. But if you are simply uploading transcripts and asking AI to help you identify trends, you aren't getting as much value out of your interviews as you could.

The Impact AI Synthesis Has On You

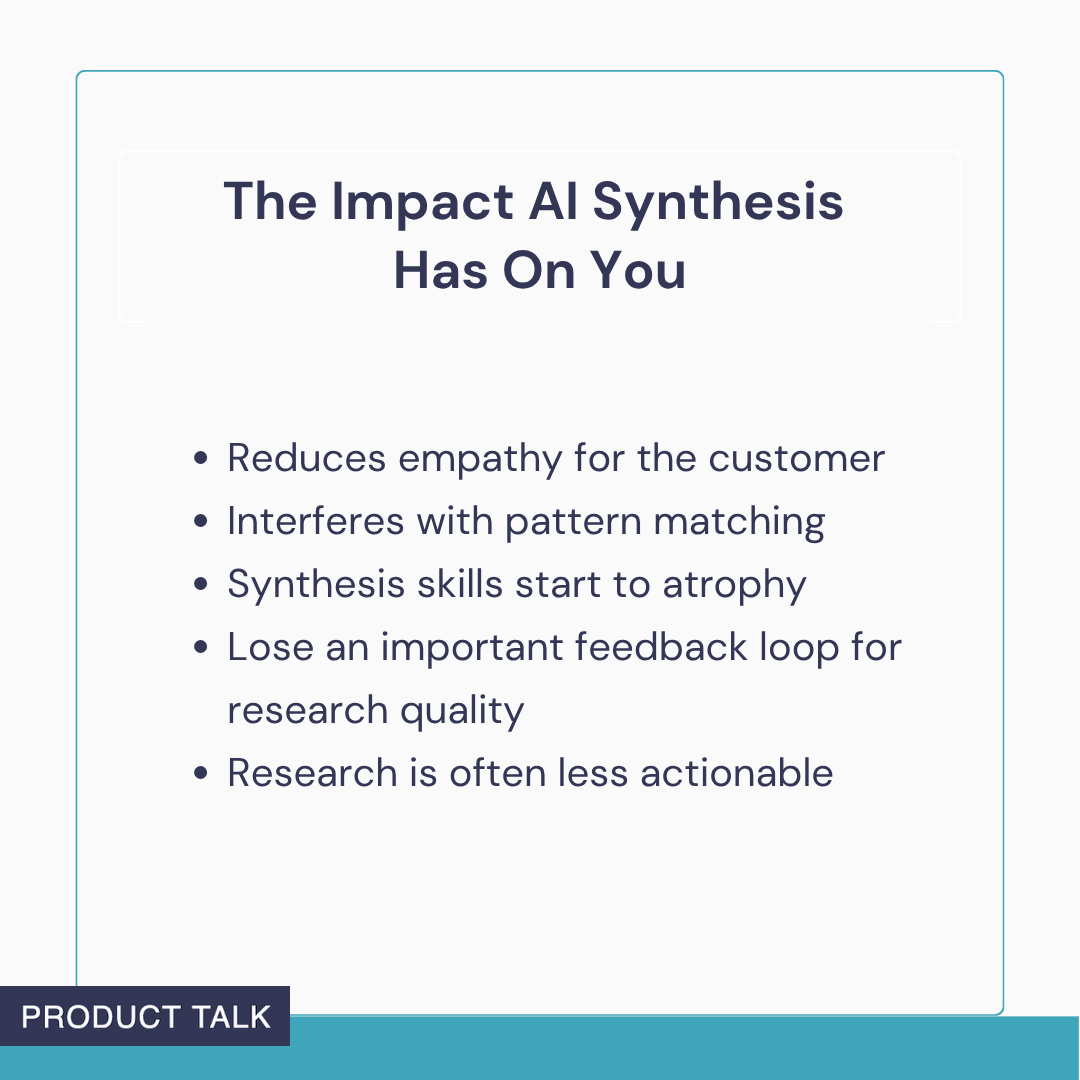

When we take the time to synthesize our interviews, we develop empathy for our customers. To create an interview snapshot, we have to dive deep into a single customer's story. We have to ask, "What happened?", "What did we really hear?", and "How can we help?" This can be cognitively challenging, but it's the cognitive effort that leads to empathy for our customer.

This deep synthesis work is also what unlocks pattern matching in our brain. Pattern recognition requires that we process and store disparate information. As our brain works to figure out how and where to store each element, it finds connections with previously stored elements. When we outsource this work to AI, we don't benefit from these connections.

Even more concerning, when we outsource synthesis to AI, our synthesis skills start to atrophy from lack of use. And this has a double whammy effect. When we lose our synthesis skill, we also lose the ability to evaluate the quality of AI synthesis.

Two recent studies (see here and here) have found that experts are much better than novices at catching the subtle mistakes that LLMs tend to make. So not only do our own skills atrophy, but we also get less value from AI synthesis.

Additionally, when we take the time to revisit an interview transcript, we tend to find mistakes in the questions that we asked. We notice where we could have followed up for more detail. We identify where we misinterpreted what the person said. In other words, interview synthesis is a feedback loop for how well we conducted the interview. When we outsource synthesis to AI, we sever this feedback loop.

Finally, we know that when teams rely on secondhand research reports rather than firsthand customer exposure, they're less likely to act on that research. My fear is that AI synthesis mimics this pattern of secondhand research, and might result in the research being less actionable.

Despite all of these concerns, AI synthesis can provide tremendous value. Let's look at how.

Three Ways AI Can Actually Help

There are three ways I am confident AI can help us today.

1. AI as a Notetaker

Most teams are already using AI to generate transcripts from their interviews. I do this as well. AI transcription is excellent and virtually free.

Some are going further and using tools like Granola to turn those transcripts into organized notes. This can be helpful, but use it cautiously. Make sure the notes stay true to the transcript.

AI can also add metadata to your transcripts to make them more searchable. I do this with my own interview notes. I have an LLM automatically add structured data to each transcript: the date of the interview, the participant, their role and company, the topics we covered, and so forth. This makes it much easier to find the right interview when I need it.
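As a rough illustration of why structured metadata pays off, here is a Python sketch of a metadata record and a simple lookup. The field names mirror the list above, but the `TranscriptMetadata` class, the `find_interviews` helper, the file paths, and the sample records are all assumptions made for this example. How the LLM fills in the fields is left out.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class TranscriptMetadata:
    interview_date: date
    participant: str
    role: str
    company: str
    topics: list[str]
    path: str  # where the transcript file lives


def find_interviews(index, topic=None, role=None):
    """Return records matching a topic and/or role, newest first."""
    results = []
    for meta in index:
        if topic and topic.lower() not in (t.lower() for t in meta.topics):
            continue
        if role and role.lower() != meta.role.lower():
            continue
        results.append(meta)
    return sorted(results, key=lambda m: m.interview_date, reverse=True)


# Invented sample records.
index = [
    TranscriptMetadata(date(2025, 1, 14), "P1", "Product Manager", "Acme",
                       ["onboarding", "pricing"], "interviews/p1.txt"),
    TranscriptMetadata(date(2025, 2, 3), "P2", "Designer", "Globex",
                       ["onboarding"], "interviews/p2.txt"),
    TranscriptMetadata(date(2025, 2, 20), "P3", "Product Manager", "Initech",
                       ["pricing"], "interviews/p3.txt"),
]

matches = find_interviews(index, topic="Pricing", role="product manager")
print([m.participant for m in matches])
```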

2. AI as a Fresh Perspective

I strongly encourage each member of the product trio to synthesize each interview separately before discussing as a team what they learned. This doesn't have to be a big time investment. Think on the order of 15–20 minutes per interview.

When each person synthesizes the interview separately, we get three different perspectives on what was heard. AI can play the role of a fourth, or even fifth, perspective if you use more than one model.

I like to synthesize my interviews myself and then also run them through dedicated Claude and ChatGPT Projects that I've specifically set up to have the right synthesis context.

Because I also did the synthesis work, I am in a much better position to evaluate the LLM output. It might not save me time, but using AI synthesis as another perspective adds more nuance and context to each interview.

Sometimes the AI catches things that I missed. Sometimes it frames an opportunity a little differently than I might and it forces me to consider what I really heard.

I love collaborating with AI on interview synthesis.

My Workflow for Using AI as a Fresh Perspective

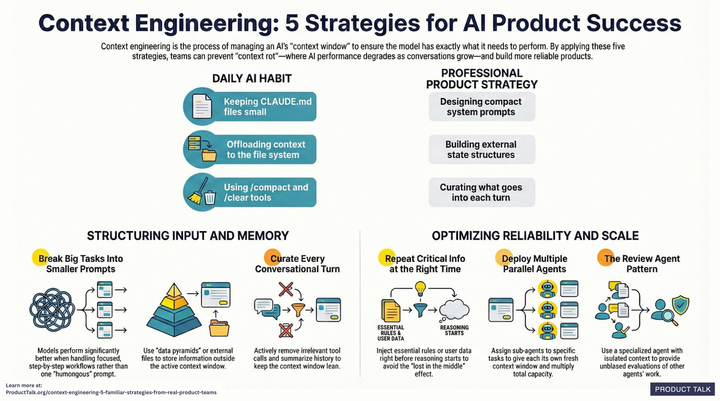

- Set up a dedicated space for doing AI synthesis. That space can be a ChatGPT Project or custom GPT. It can be a NotebookLM notebook. It can be a Claude Project or a Claude Code directory with its own CLAUDE.md file. The key is to set up persistent context and instructions.

- Define the right context. I include: the context for the research (in my world, that's my ideal customer profile, my current outcome, my current target opportunity, my research questions), some context on synthesis (e.g. resources on interview snapshots, opportunity solution trees, etc.), and instructions on how to do each step.

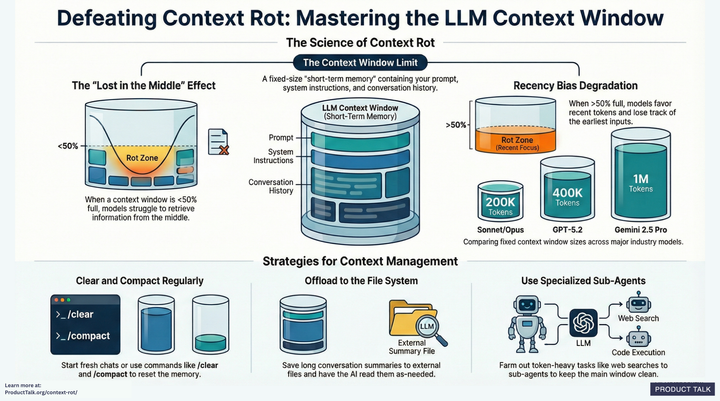

- Deliberately separate single interview synthesis from cross-interview synthesis. I use a new conversation for each interview to create interview snapshots. Once I've created all of my interview snapshots, I start a new conversation to work on cross-interview synthesis. This helps to avoid context rot and ensures that the LLM stays focused on the right task.

- Be disciplined about treating this like another perspective on your team and not absolute truth. As we've seen, LLMs (like humans) make mistakes. Examine the output. Question it. Augment it. Use it to strengthen your own perspective, not replace it.

- Verify any opportunities that the AI found that you missed. LLMs have found opportunities that I missed. This is one of the reasons why I love AI synthesis as a collaborator. But you have to guard against hallucinations and bias. When an LLM finds something that you missed, go back to the source material and verify it's really in there.
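The separation between the two synthesis steps can be sketched in code. The Python below is a minimal sketch, not a specific vendor's API: `ask` is a hypothetical stand-in for whatever function sends a list of chat messages to your model and returns its reply, and the prompt wording is a placeholder. What matters is the shape: every interview gets a fresh message list, and the cross-interview step sees only the snapshots, never the raw transcripts.

```python
from typing import Callable

Message = dict[str, str]
AskFn = Callable[[list[Message]], str]  # hypothetical model-calling function


def synthesize(
    transcripts: dict[str, str], context: str, ask: AskFn
) -> tuple[dict[str, str], str]:
    """Run single-interview synthesis, then cross-interview synthesis."""
    snapshots = {}
    for participant, transcript in transcripts.items():
        # Step 1: a brand-new conversation for each interview.
        messages = [
            {"role": "system", "content": context},
            {"role": "user",
             "content": f"Create an interview snapshot for this transcript:\n\n{transcript}"},
        ]
        snapshots[participant] = ask(messages)

    # Step 2: another fresh conversation that only sees the snapshots.
    combined = "\n\n".join(f"[{p}]\n{s}" for p, s in snapshots.items())
    messages = [
        {"role": "system", "content": context},
        {"role": "user",
         "content": f"Synthesize across these interview snapshots:\n\n{combined}"},
    ]
    return snapshots, ask(messages)


# A stub that records each conversation, used here instead of a real model.
calls = []


def fake_ask(messages):
    calls.append(messages)
    return f"response {len(calls)}"


snapshots, cross = synthesize(
    {"P1": "transcript one", "P2": "transcript two"},
    context="ICP, current outcome, target opportunity, research questions",
    ask=fake_ask,
)
print(len(calls))
```

Swapping `fake_ask` for a real model call keeps the structure intact. Each snapshot should still be reviewed against its transcript before it feeds the second step.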

My Favorite Tools for AI as a Fresh Perspective

I've experimented with several AI tools for interview synthesis. Here's what I've found works well:

- Claude (via Claude Projects or Claude Code): This is my go-to for collaborative synthesis. I set up a dedicated Project with my research context (ICP, current outcome, target opportunity) and use it to generate interview snapshots alongside my own synthesis. Claude handles long transcripts well and can hold multiple interviews in its context window when doing cross-interview synthesis.

- ChatGPT (via custom GPTs or Projects): Similar to Claude, but I find it useful to run the same interview through both models to get different perspectives. A custom GPT lets you set persistent instructions, which is critical for maintaining synthesis quality.

- Granola: I use this for turning transcripts into organized notes. The key is reviewing the notes against the original transcript to catch any misinterpretations before using them for synthesis.

A word of caution: Most dedicated "interview analysis tools" focus on cross-interview synthesis (step 2) and skip single-interview synthesis (step 1). If you use these tools, make sure you're still synthesizing each interview individually before looking for patterns across interviews.

3. AI as a Customer Synthesis Teacher

Learning how to do high-quality synthesis is hard. It takes practice. It takes time.

I am excited about the potential of AI to help us learn synthesis skills. This is an opportunity that I am currently exploring for my upcoming course on the interview snapshot.

AI can already help us with opportunity framing by suggesting alternative framings. It can help us explore different interpretations of the same passage. It can help us identify when our opportunities are solutions in disguise.

I love using AI as a thought partner and coach. And my early experiments for how to use AI to teach high-quality synthesis are going well. I'll be sharing much more in this area soon.

Finally, we are just learning how to co-create with AI, and I strongly suspect we will find ways to better support human synthesis with AI. The goal is to take advantage of the speed of AI synthesis without losing the benefits of human synthesis.

In the next section, I'll explore what collaborative AI-aided synthesis looks like, review relevant research, and share how I'm already putting these ideas into practice.

An Open Question: Can AI Help Humans Do Better Synthesis Faster?

Two recent studies (see here and here) have found that working with AI can raise the performance of novices in a domain. Teams who have never done any interview synthesis may benefit from AI synthesis. And I want to encourage you to experiment with AI synthesis.

However, those same studies show that experts working with AI show the highest level of performance. An expert human collaborating with AI on interview synthesis may outperform both AI-only synthesis and even human-only synthesis. So I also want to encourage you to work on developing your human synthesis skills.

The sweet spot seems to be expert human synthesis aided by AI synthesis.

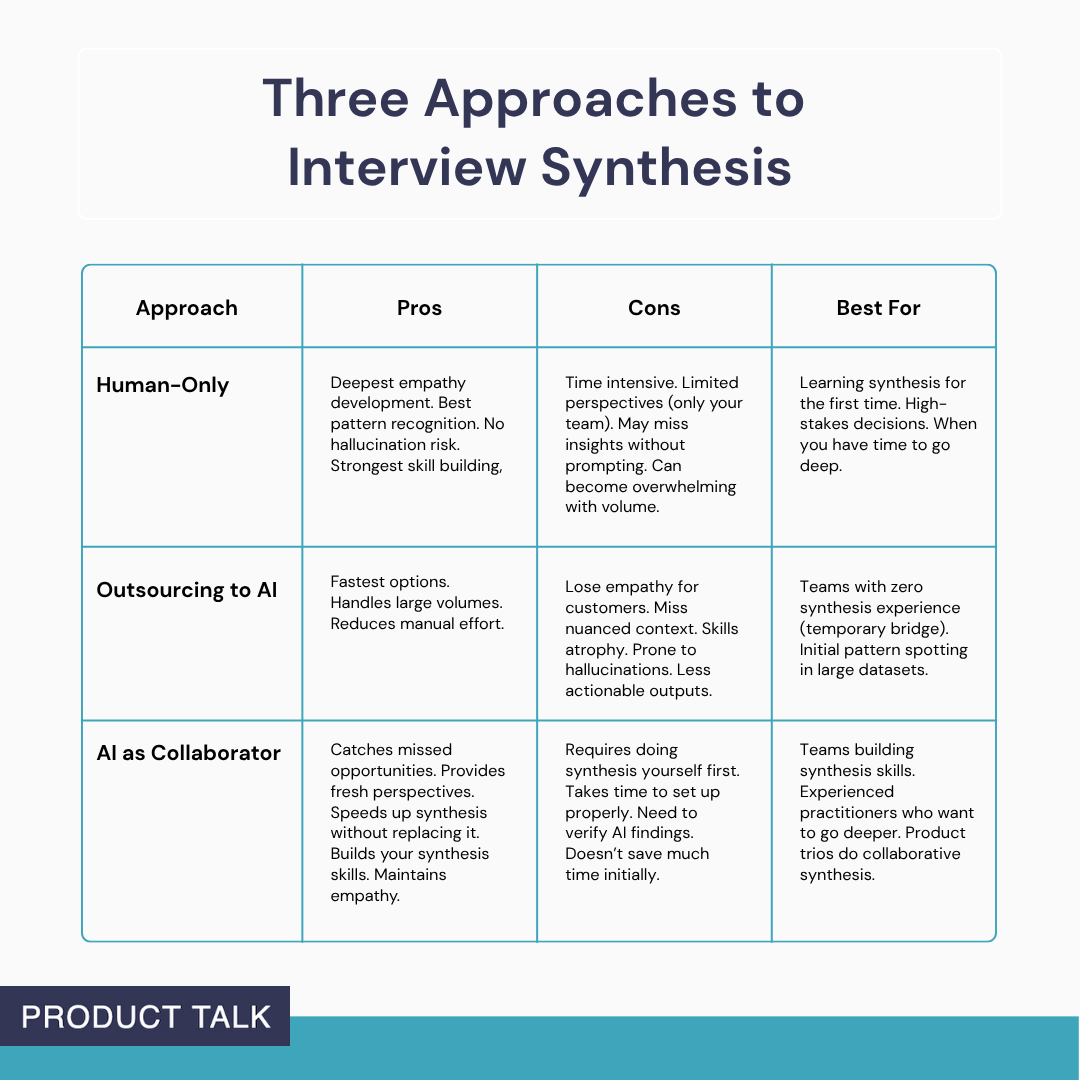

Three Approaches to Interview Synthesis

So how do you get to that sweet spot? It depends on where you're starting from. Here's how the three main approaches compare:

| Approach | Pros | Cons | Best For |
|---|---|---|---|
| Human-Only | Deepest empathy development. Best pattern recognition. No hallucination risk. Strongest skill building. | Time intensive. Limited perspectives (only your team). May miss insights without prompting. Can become overwhelming with volume. | Learning synthesis for the first time. High-stakes decisions. When you have time to go deep. |
| Outsourcing to AI | Fastest option. Handles large volumes. Reduces manual effort. | Lose empathy for customers. Miss nuanced context. Skills atrophy. Prone to hallucinations. Less actionable outputs. | Teams with zero synthesis experience (temporary bridge). Initial pattern spotting in large datasets. |
| AI as Collaborator | Catches missed opportunities. Provides fresh perspectives. Speeds up synthesis without replacing it. Builds your synthesis skills. Maintains empathy. | Requires doing synthesis yourself first. Takes time to set up properly. Need to verify AI findings. Doesn't save much time initially. | Teams building synthesis skills. Experienced practitioners who want to go deeper. Product trios doing collaborative synthesis. |

My recommendation: Start with human-only synthesis until you understand what good looks like. Then shift to AI as collaborator once you can evaluate AI output quality. Only outsource to AI if you're genuinely blocked and need a temporary bridge—but plan to build your synthesis muscle.

What Does AI as a Collaborator Look Like?

Here's what I'm most interested in: Is there a way to leverage the speed of AI synthesis while also maintaining (and even growing) our human synthesis skill, while building empathy for our customers, and retaining ownership over our research and decision-making?

This is a tall order. But I think we can do it. Here's what it might look like.

Subscribe to unlock:

- How AI can facilitate expert human single-interview synthesis and cross-interview synthesis.

- A detailed look at how I'm putting this vision into practice today.