Q&A Session: Building AI Evals for the Interview Coach

I am falling deeper into the AI rabbit hole every day. Now, that’s not to say that I believe all the hype about AI. What I mean is that I’m excited to see what’s just now possible with AI (some may even say I’ve become a little obsessed with this topic).

Over the past few months, I have been building a new AI product, the Interview Coach. And I’ve committed to working out loud and sharing my learnings with you. My goal with these conversations is to add some nuance to discussions about AI, remove some of the mystery surrounding this topic, and help encourage anyone who’s feeling reluctant to start experimenting.

In August, I hosted my second “Behind the Scenes” webinar where I did a deep dive in the topic of building AI evals for my Interview Coach product. One of the reasons why I personally find evals such a compelling topic is that they give us a fast feedback loop that’s remarkably similar to what we get with continuous discovery. If you haven’t watched that webinar or read the transcript, you might want to start there first.

Today’s post is based on the lively Q&A session at the end of that webinar—I ended up staying an extra 30 minutes and still couldn’t address all the questions participants had submitted!

What tools and APIs are you using, and what are the costs associated with them?

Everyone is obsessed with tools, but I hope one of your takeaways from this talk is that you can start with the tools you already know and have. I still don't use an eval tool today. I'm writing my own evals in VS Code. I'm using a Jupyter Notebook to do my analysis. I'm not using LangChain, Langfuse, Arize, or Braintrust. The reason why I'm not is because they overwhelm me. I'm not ready for that. I also didn't want a tool’s perspective to influence my perspective while I was still learning.

What tools I'm using:

- I develop in a serverless environment. All of my evals are Lambda functions orchestrated via Step Functions.

- I use a mix of Anthropic and OpenAI models to support the Interview Coach. The Interview Coach is primarily Anthropic because I think Claude has fewer hallucinations when working with transcript data.

- A lot of my evals are OpenAI, and that's because I can use 4o-mini, and it's pretty low cost.

- I was using the Python library instructor to guarantee structured JSON output, but I stopped using it because it breaks prompt caching for Anthropic and I need prompt caching.

Cost management: Some transcripts can be really long, like 20,000 tokens. That can get very expensive because the LLM Coach is multiple LLM calls on the same transcript. So I cache the transcript. I'm paying for cached tokens on subsequent calls. If all of that is over your head, just know that when you’re building LLM tools, cost management is a big piece.

I'm down to $0.02 to $0.20 per transcript run. That's all-in—the Coach response plus all the evals. The variation in cost depends on the length of the transcript. I suspect that will continue to go down over time.

We do rate limit this for our students right now and we will be rate limiting it as part of the Vistaly beta, but it’s cheap enough that we can see how people want to use it and we can try to set rate limits based on what supports actually learning. We’re just trying to make sure that cost and pricing lines up.

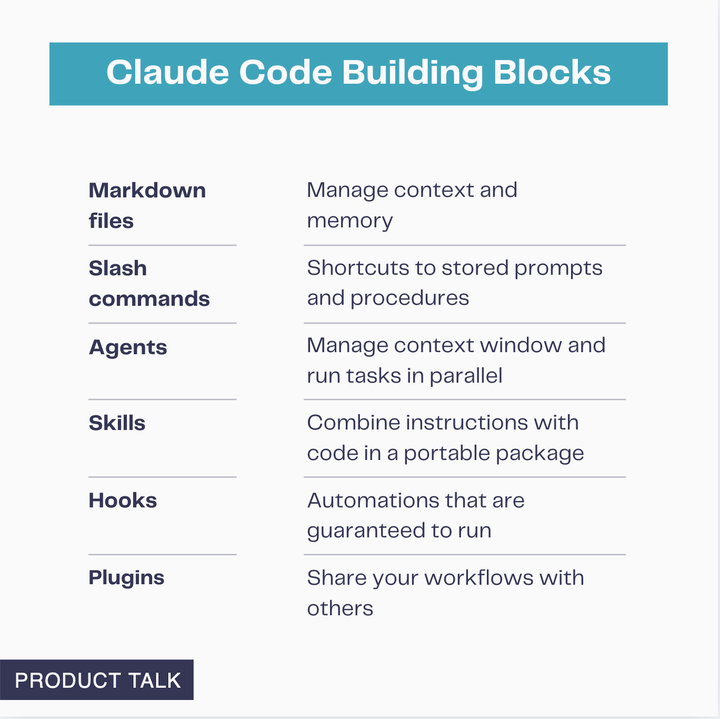

I use Claude Code—I had to bump up to the $100 a month subscription because I was maxing out the $17 a month subscription every hour.

Are all the evals you do done during development, or do you also have measurements and evaluations for the live production Coach?

Right now, because the only people using the Interview Coach are my course students, that's not super high volume. We do cohort-based courses where we have 20 to 50 students in a cohort, so I’m not looking at thousands and thousands of traces. I actually run my evals on every trace that's coming in.

We're about to release this to beta customers in Vistaly. We don't have a clear picture of how much they're going to use it. To start, I will probably run my evals on a percentage of traces. But once we have a sense of volume and I can do cost estimates, I would love to be able to run my evals on all traces, but we’ll have to assess costs first.

I do have a couple evals that run as guardrails. If you're not familiar with that term, you can set an eval as a guardrail, meaning it runs before you return a response to the user. The LLM generates the response, you run the guardrail. If the guardrail passes, you return it to the user. If the guardrail fails, you rerun the LLM response before you pass it to the user.

One of the guardrails I use is to make sure I'm getting appropriately structured JSON because I can't use instructor anymore. I have an eval that is parsing my JSON response, making sure it's valid. If it fails, it kicks back to the LLM to try again. I actually kick the JSON output to another LLM call and ask it to try to fix the output instead of rerunning the Coach, so that’s an example of a guardrail that runs in production.

I have proprietary content. You mentioned privacy—would you summarize once more how to protect our content when using the different tools?

First of all, Airtable, AWS, Zapier—these are SOC 2 compliant, good data processor tools. I'm not using somebody's personal project and sending interview transcripts to those tools.

The first thing is: If you're using third-party tools, you need to be aware of their data policies. Are they using any inputs for training? I've made sure that none of my tools do any of that stuff.

Student transcripts get stored in Airtable, but student transcripts are not real customer interviews. They're practice interviews from our course. Vistaly interviews will not be stored in Airtable. Only the LLM response will be stored in Airtable. That's because those are real customer interviews.

We're really transparent with the user about what we're collecting and how we're using it. I also have a data retention policy. I only keep traces for 90 days. I have a process that deletes any data that's older than 90 days.

You mentioned performance issues with Airtable. What would you have used instead?

I love Airtable. I use Airtable as my CRM, content editorial calendar, orders database—I use it a lot.

I've noticed in the last six months to a year, my bases load really slowly, even my bases that don't have a lot of records. With interview transcripts in particular, Airtable's performance is not great when you have a text cell with really long text.

I'm still using it. I haven't moved off of it yet, but I probably eventually will move to something like Supabase as my database for this.

Why do some traces have failure modes, but not annotations?

When I was first annotating my traces, I did annotations. As I gained more traces, sometimes when I was reviewing them, I didn't need to write out all that text. I had already uncovered failure modes. I just directly annotated them with failure modes.

If they have a failure mode but no annotation, it's because I annotated them after I came up with my failure mode taxonomy and I just recognized the error. That was from a second cycle of annotating.

What does it mean to have an AI-enabled product in terms of time to develop?

One thing I’m hearing a lot of AI engineers talk about is how can we have roadmaps if we can’t predict how long it’ll take to build an AI product because we don’t know how much work is going to be involved to get it to a good state. I’ve been working on this for four months now as a single developer and a lot of that time was just me learning to be an engineer.

I actually think when I build my next AI product, it’ll go way faster because I won’t have to learn Jupyter, Python, or dev or prod environments. And I’m also building this muscle of fast feedback loops and error analysis, so I expect the next one to go much faster, but it’s still really unpredictable and we still have to draw a line in the sand of when it’s good enough.

The Interview Coach is never going to be error-free. With LLMs, we’re getting non-deterministic output so it’ll never be error-free, but when is it good enough? And that’s always evolving.

Which API did you use for LLM-as-Judge and what did the cost look like?

When I'm writing a judge, I start with the best model to figure out what will work. Once I have the eval working, I gradually move to smaller and smaller models and I compare their performance. I'm looking for the smallest model that performs as well as the best model.

Most of my evals are very inexpensive. Let’s talk about simplicity for a moment. If I give an eval the prompt: "Here's a full coaching response. Are there any leading questions?" That's not a simple task. It's a lot of text, not all questions, lots of questions in there. That's a complex task.

If I give the LLM: "Here's one question. Is it a leading question? Yes or no?" That's a very simple task. Very simple tasks are cheap. For that particular eval, I'm using 4o-mini. I think I'm paying like 0.0001 cent. It's super cheap. Not even worth worrying about.

Did you have good transparency on your token usage for the coach and your evals?

When you make a call to an LLM in the response, it will tell you both input and output tokens used. You can log that and then you can use that data to calculate costs, so you can look at what each trace costs. I do log that because I am trying to figure out pricing, rate limits, and what this is going to cost us collectively as part of the partnership with Vistaly. When I store traces, I include input tokens and output tokens, whether or not they were cached input tokens, and then I calculate an estimated cost for that trace.

Do you run your evals in some sort of equivalent to a CI/CD pipeline and where in the interaction does this show up?

I'm really new to CI/CD—I just learned how to split my dev environment from my prod environment.

I don't release a change in the Interview Coach to production unless I've tested it on my evals. The way that I do that is I have a dev set and a test set.

My dev set is the set of traces that I'm using while I iterate on my evals, while I iterate on my prompts. I'm looking for: Am I getting the performance I expect?

My test set is a holdout set that once I think I have a change that's ready for release, I run that release on my test set. My test set is actually 100 traces, so it’s much bigger than my dev set, which is only 25 traces.

It's expensive to run that test set—I'm running 100 transcripts through the Coach. But that's telling me: Am I seeing what I saw perform in development work on my holdout set? If that works, I can feel confident that I'm going to run that.

This only works if my dev set and test set represent what I'm seeing in production. I'm constantly refining both my dev set and my test set to make sure they're matching the types of transcripts I'm seeing in my production traces.

I have to make sure my data sets represent the diversity of usage I might get in production.

To answer this question of whether I run my evals in a CI/CD pipeline: Not yet. I run them in my environment before I release. I am running evals on production traces right now. It’s not part of my deployment pipeline, but it’s part of my production process.

Do you aim for 100% in your test set?

No. In fact, in the class, one thing they talk about is if your evals are getting to 100%, they're saturated and they're no longer measuring anything. You don't want evals to hit 100% because remember: An eval is detecting an error. If you don't have that error in your data set, then you don't need to be measuring that error.

It’s theoretically possible that the error could come back, but as a general rule, evals that return 100% are generally indicating that you’re not measuring something meaningful.

I'm not looking for 100%. What I'm looking for is an A/B test. I made a change. I'm comparing my eval’s performance to what's in production versus what my change is. I'm looking across my evals and saying: Does the change look better? But no change is categorically better—it might be better on some evals and worse on some other evals. There's still a judgment call: Does this overall look better? Should I release it?

It sounds like the Interview Coach is the first feature of a comprehensive interview bot designed to manage the entire customer interview process. Have you already conducted a first experiment with such an advanced prototype?

The Interview Coach is just designed to give students feedback on their interviews. My goal is not to automate interviewing or to manage the entire customer interview process. It is very much just designed to be a teaching tool.

I’m curious about the code assertion eval you showed us—was it always the case that when someone uses words like typically and usually, they were asking a general question? Did you measure something like that percentage accuracy of the eval vs. manual annotations?

Yes, exactly. On one of the slides, I showed my most recent run of that eval compared to human data. My eval is finding that only 1 out of 25 traces have that error. This eval is 100% aligned with my judgment. Whenever the judge finds an error, I also found an error. If I find errors that it doesn't find, it's teeny-tiny—like 1% or less.

For me, this is no longer an eval I spend time on because the error rate is so low. It's very effective, it’s very aligned. I still run it because I'm still looking for: Is it staying aligned? I still run it, score it, compare it to human labels because I want to make sure it stays aligned.

Where did you end up getting non-synthetic interview transcripts at the very beginning to test the Interview Coach?

I did a couple of things. In the class (get 35% off when you purchase through my affiliate link), we learn how to generate realistic synthetic data. I struggled with synthetic data at the full transcript level. What I was able to do was synthetic data at the individual dimension level. I could generate some interviewer turns, and I could test my individual dimensions.

My initial set of traces came from my own interviews, some of my instructors' interviews. I asked some of the alumni of our courses to submit interviews. I used a lot of my alumni as our beta group.

It looks like you have five evals. Is your Interview Coach also built with separate mini coaches to get at different lenses?

It's not a mega prompt. It started as one prompt, it evolved into a workflow. It will probably eventually evolve into an agent.

Each dimension is its own prompt. Evals have prompts. There's some helper prompts. There's actually many LLM calls involved in a single Coach response.

This is something I covered in the first Behind the Scenes webinar when I talked about architecture patterns, so if you want to learn more, make sure to check that out, as well as my blog post Building My First AI Product: 6 Lessons from My 90-Day Deep Dive.

Was your STS eval an LLM-as-Judge?

No, it was actually a really simple code assertion that works remarkably well—and it helped me uncover what my own intuition was trying to measure.

Which roles do you see doing each of these activities in teams?

I think with evals, we really need tight collaboration between engineers and product managers and designers, because we do need domain expertise, but we also need engineering skills.

Does constantly rerunning your evals get expensive?

It costs me about $3 to run my evals on my dev set, but that's if I run everything. My evals are designed to be really cheap, but they depend on these helper functions. The helper functions extract data from either the transcript or the LLM response.

If the transcript isn't changing, I don't have to keep rerunning those helper functions. What I do in my development environment is I run all those helper functions first, and that's about $2.40 of that $3. Then I can rerun evals and not rerun the helpers. It's only costing me 50 or 60 cents. I've architected it to manage costs.

What's your outlook on working as a product team in the AI era? We hear a lot about different roles becoming redundant or roles crossing into other roles.

I've always been a boundary spanner. I mentioned I was a front-end engineer and a designer and then moved into product management. I think we should have less boundaries between our roles. We should learn a lot more about how each other works, and I think AI is going to enable that. We're going to get better at collaborating when we know more about each other's jobs.

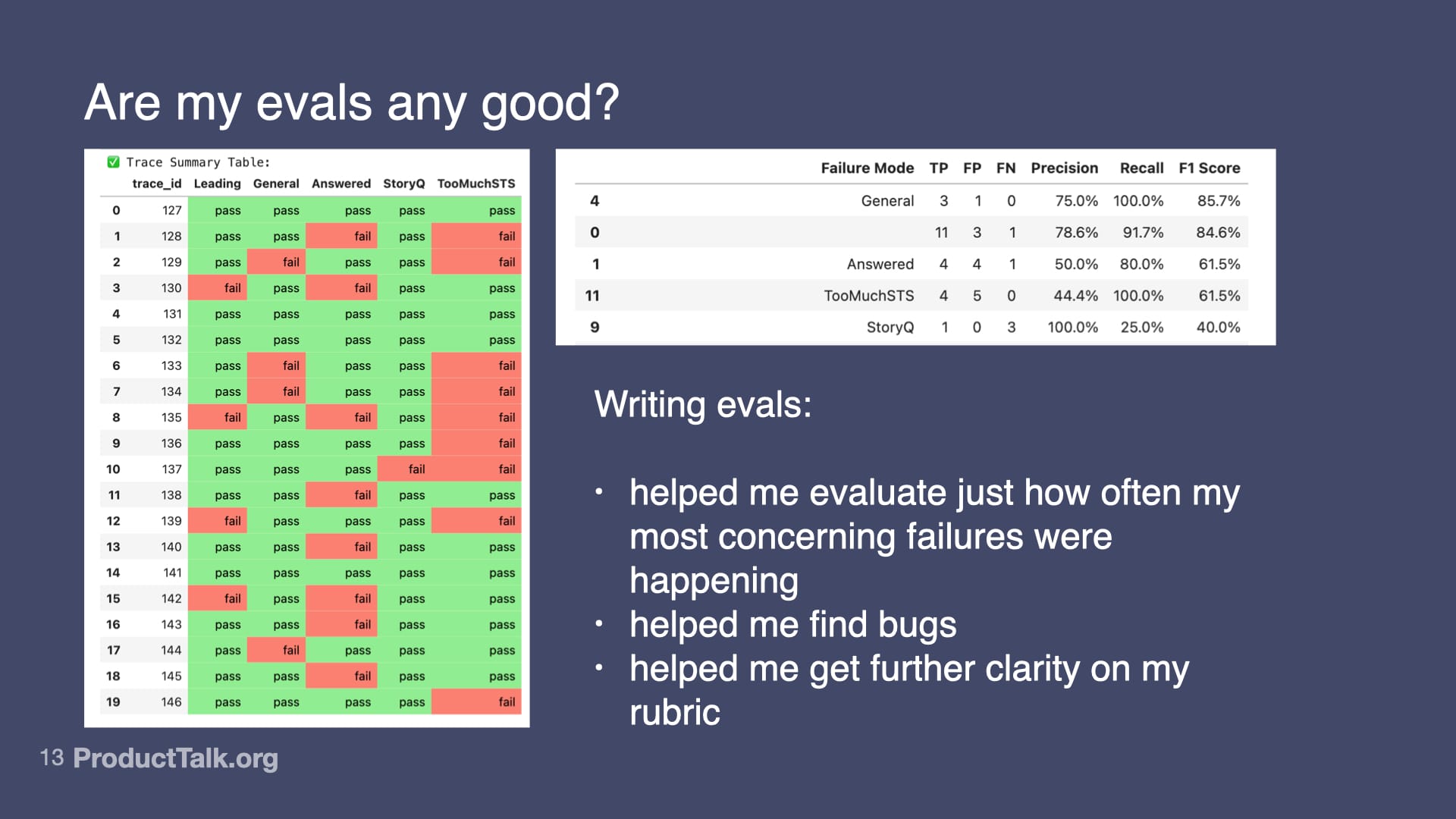

How do you know if your evals themselves are right?

That's what these scores are doing. On the “Are my evals any good?” slide, this table is comparing my eval output to my human-labeled output. This table is helping me understand how aligned my evals are with my own judgment.

It's really important to do this because your evals could just be wrong. It's really important to do this on a percentage of traces over time because your evals can drift with time. This is a critical step and you should make sure not to skip it.

Why did you choose Lambdas? Was this the easiest?

Three or four years ago, I started automating some of my business logic. I didn't really want to get involved in running software and having to have a server somewhere. Serverless fit that need really well.

When I started working on this, I started using the tools I was familiar with. It’s that simple. I just didn't want to maintain an EC2 instance or anything like that.

It actually works surprisingly well. I know a lot of people are using AI-specific orchestration tools, but I'm finding Step Functions work just fine.

Eventually as I move more into an agent model, I'll probably have to upgrade into one of those tools. But for now this is more than adequate.

This allows me to delay having to learn a new thing. This is how I prevent being overwhelmed. I just look at: What's the next tiny piece I have to chip off? And I know eventually I’ll have to learn some of those more AI-specific tools because I do want the Coach eventually to be an agent.

How did you decide on the AI tools you’re using?

I mentioned Claude Code—I do use both OpenAI and Anthropic’s models through the API and through the web browser extensively. I particularly like Claude Code right now because it lives in my IDE and can see all the context of my whole repo. And I really love the Claude Code interface. That’s just a personal preference. I know some people are loving GPT5 for coding. I’ll probably experiment with that at some point.

But I’m trying to focus on building products. I don’t really want to spend a lot of time trying anything and everything under the sun. I just pick up what I need based on my goal. I’m a very goal-driven learner. I’m trying to do a thing, what’s the thing I kind of understand that will help me do that thing? So I’m not optimizing or trying to maximize my tools—I’m really satisficing. What gets me there?

Do you annotate and assign failure modes based on pieces of transcripts like you would with qual coding in research?

Most of my annotations are about the Coach's response—it’s more about what the Coach is telling the student rather than the interview. I do have some transcripts where as an instructor, I have tagged what's good and what's not good in detail. I use that as part of my training set. The term training set is a little misleading here—I'm not doing any fine-tuning. But I use my training set for n-shot examples in my prompts. I have done really in-depth human labeling of a small number of transcripts to help improve my prompts.

How often do you use the Claude-generated app to update your evals?

I'm assuming you mean my annotation tool. I am constantly human-labeling traces. If I do a new eval, I need to make sure I have human labels that correspond with that new eval. As I reduce errors through evals and improvements, then I prioritize the next level of errors. That ends up meaning I have to do more human labeling. It's kind of never-ending.

Did you try to work with LLMs in voice mode?

I have Wispr Flow through the Lenny bundle and I keep meaning to try it. I think it would drive my husband nuts because he works right across the hall so I haven’t started it yet, but we’ll see.

It sounds like this was your main project for the past few months. Is that right? Just to get a better perspective on how much effort you put into it since April.

It's hard for me to quantify this. I have been working on this as my primary project, but I've also written several blog posts. I run a course business. I manage our sales process. I'm a company of one. I still write social media posts. I do plenty of other stuff. But I have been working on the weekends and in the evenings just because I'm literally having so much fun.

If I were to equate this to a 40-hour week, I would guess I’ve spent about 60 to 70% of my time on it. But I also know that I probably suck at estimates, so take that with a grain of salt.

When you use the LLM to learn about evals and coding, how did you assess if you can trust the reply of the LLM?

I just experimented. I would ask o3 or Claude Opus. I would try things out. This is where the notebook was great. It's just throwaway code. I would try things out, see if it worked, see if it didn't work.

I don't really outsource things to LLMs. I like to use them as thought partners. Because I'm learning, I don't just take code and run it from an LLM. I beat it up. I make sure I understand it. I ask a lot of questions.

I do a lot of asking, "What does this line do? I don't quite get it." Because I want to make sure I really understand my code base. I want to make sure my evals are doing what I think they're doing. I want to make sure my data analysis is doing what I think it's doing.

I'm not vibe coding this. I am relying a lot on Claude Code, but not in a vibe coding sense—in a pair programming thought partner sense. I still feel responsible for my code. I'm making sure that I really understand all of it.

How do you analyze and evaluate a long LLM interaction like an interview? Is it per response? And if so, does it include all the previous responses?

The Interview Coach isn’t a multi-turn product. Students submit their transcripts and they get emailed feedback.

So I don’t have experience with multi-turn interactions because this is literally the only AI product that I have built so far. But I know in the class that I took there is a whole module on multi-turn conversations and how to evaluate them, so if you want to learn more about that, I do recommend checking out that AI evals course (get 35% off when you purchase through my affiliate link).

How did you manage the roadblocks?

I think with vibe coding, we get stuck because we don't understand what the LLM is doing. And I’ve vibe coded—I’ve built things with Lovable and Replit. With Replit I got stuck several times and with Lovable I got stuck and had to start over with an app. With vibe coding, I'm not even looking at the code. I'm just assuming the LLM is doing the right thing.

This is really different. I'm having a conversation with the LLM about how to write the code. If it's generating the code, I'm reviewing it and making sure I understand every line of it. If something doesn't make sense to me, if I don't like a pattern, I debate with the LLM.

Think about this more like I'm sitting with another engineer and we're constantly having a conversation about how to build a thing where I'm hands-on with the code. As a result, I'm not getting stuck because I understand the code. This is very different from black box vibe coding, hoping I don't get stuck.

Have you thought about teaching a course on this?

I have, but the challenge is this AI evals course (get 35% off when you purchase through my affiliate link) is really good. I don't think I can do a better job than them. Their course is very technical. Maybe eventually I'll do something on this for less technical folks.

But I'm not trying to race to be first. I think I care about quality too much. I don't really feel like an expert yet. Maybe that's a little bit of imposter syndrome on my part. There's a lot of noise out there and I'm trying really hard not to contribute to the noise and just share my experience.

How do you get from where you were at the beginning of May to where you are now from a technical standpoint? What is the best way to learn how to set up Jupyter Notebooks for my code base?

I just started with ChatGPT and I just said, "I'm writing pseudocode in an Apple Note. I'm getting stuck. It turns my quotes into smart quotes. It's breaking my code. My husband says to use a Jupyter Notebook. Can you help me get started? I'm a total beginner."

That's what my prompts look like. My personal prompts are garbage. I just literally talk to ChatGPT like it's a friend that won't make fun of me for my dumb questions. Because we can go back and forth and refine, it just guides me.

We've never had a tool like this before. We literally can learn anything. I think this is the thing that's most exciting to me.

So I would say just start somewhere. And if you already have code to play with, I would just start with Claude Code. Although I will say notebooks in VS Code aren’t as nice as notebooks in JupyterLab. So maybe if you’re new to notebooks, I’d start with JupyterLab and run Claude Code in a terminal or have ChatGPT open in another window because the JupyterLab experience is much nicer than VS Code.

Can we make this using Lovable or is Claude Code better?

I’m a big fan of Lovable—in fact we published a blog post on 11 real-world stories of how product teams are using Lovable—so this is not a dis on Lovable. What I like about Claude Code and VS Code over Lovable is I am hands-on in the code. It’s not a black box. It’s my repo, it’s my code, it’s my responsibility. And I’m pair programming with Claude Code. It’s a lot harder to do that with these AI prototyping tools.

What if your tweak caused the Coach output to generate a new class of errors that wasn't tracked by the evals?

This is a really great question. It is very often the case that I'll fix one error and then a new error will start to pop up. This is why I'm continuously human labeling while I am continuously running evals on production traces, because I do think this is a continuous investment. I don't think the Interview Coach will ever be done. I think when we build AI products, we have to commit to evolving them continuously over time because they will drift. If you care about quality, it will require a continuous investment.

If this was helpful, do me a favor and reply via email or reach out on LinkedIn or Twitter to let me know. I’d love to get feedback on how this is resonating.

Comments ()